
Chapter 9 Pages From Advanced Topics in Java-Copy

Java Programming (JP 01)

University of Kerala

Recommended for you

Students also viewed

Preview text

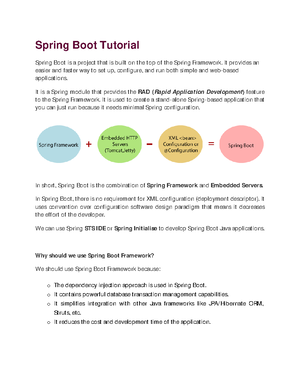

Advanced Topics in Java: Core Concepts in Data Structures / Chapter 9

CHAPTER 9

Advanced Sorting

In this chapter, we will explain the following:

• What a heap is and how to perform heapsort using siftDown

• How to build a heap using siftUp

• How to analyze the performance of heapsort

• How a heap can be used to implement a priority queue

• How to sort a list of items using quicksort

• How to find the kth smallest item in a list

• How to sort a list of items using Shell (diminishing increment) sort

In Chapter 1, we discussed two simple methods (selection and insertion sort) for sorting a list of items. In this chapter, we take a detailed look at some faster methods—heapsort, quicksort, and Shell (diminishing increment) sort.

9 Heapsort

Heapsort is a method of sorting that interprets the elements in an array as an almost complete binary tree. Consider the following array, which is to be sorted in ascending order:

We can think of this array as an almost complete binary tree with 12 nodes, as shown in Figure 9-1.

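| index | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| num | 37 | 25 | 43 | 65 | 48 | 84 | 73 | 18 | 79 | 56 | 69 | 32 |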

Continuing at node 3, 43 must be moved. The larger child is 84, so we interchange the values at nodes 3 and 6. The value now at node 6 (43) is bigger than its child (32), so there is nothing more to do. Note, however, that if the value at node 6 were 28, say, it would have had to be exchanged with 32. Moving to node 2, 25 is exchanged with its larger child, 79. But 25 now in node 4 is smaller than 65, its right child in node 9. Thus, these two values must be exchanged. Finally, at node 1, 37 is exchanged with its larger child, 84. It is further exchanged with its (new) larger child, 73, giving the tree, which is now a heap, shown in Figure 9-3.

9.1 The Sorting Process

After conversion to a heap, note that the largest value, 84, is at the root of the tree. Now that the values in the array form a heap, we can sort them in ascending order as follows:

• Store the last item, 32, in a temporary location. Next, move 84 to the last position (node 12), freeing node 1. Then, imagine 32 is in node 1 and move it so that items 1 to 11 become a heap. This will be done as follows:

• 32 is exchanged with its bigger child, 79, which now moves into node 1. Then, 32 is further exchanged with its (new) bigger child, 69, which moves into node 2.

Finally, 32 is exchanged with 56, giving us Figure 9-4.

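| node | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| value | 84 | 79 | 73 | 65 | 69 | 43 | 37 | 18 | 25 | 56 | 48 | 32 |

Figure 9-3. The final tree, which is now a heap

| node | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| value | 79 | 69 | 73 | 65 | 56 | 43 | 37 | 18 | 25 | 32 | 48 | 84 |

Figure 9-4. After 84 has been placed and the heap is reorganized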

At this stage, the second largest number, 79, is in node 1. This is placed in node 11, and 48 is “sifted down” from node 1 until items 1 to 10 form a heap. Now, the third largest number, 73, will be at the root. This is placed in node 10, and so on. The process is repeated until the array is sorted. After the initial heap is built, the sorting process can be described with the following pseudocode:

    for k = n downto 2 do
        item = num[k]                //extract current last item
        num[k] = num[1]              //move top of heap to current last node
        siftDown(item, num, 1, k-1)  //restore heap properties from 1 to k-1
    end for

where siftDown(item, num, 1, k-1) assumes that the following hold:

• num[1] is empty.

• num[2] to num[k-1] form a heap.

Starting at position 1, item is inserted so that num[1] to num[k-1] form a heap. In the sorting process described above, each time through the loop, the value in the current last position (k) is stored in item. The value at node 1 is moved to position k; node 1 becomes empty (available), and nodes 2 to k-1 all satisfy the heap property. The call siftDown(item, num, 1, k-1) will add item so that num[1] to num[k-1] contain a heap. This ensures that the next highest number is at node 1.

The nice thing about siftDown (when we write it) is that it can also be used to create the initial heap from the given array. Recall the process of creating a heap described in Section 9.1. At each node (h, say), we "sifted the value down" so that we formed a heap rooted at h. To use siftDown in this situation, we generalize it as follows:

void siftDown(int key, int num[], int root, int last)

This assumes the following:

• num[root] is empty.

• last is the index of the last entry in the array, num.

• num[root*2], if it exists (root*2 ≤ last), is the root of a heap.

• num[root*2+1], if it exists (root*2+1 ≤ last), is the root of a heap.

Starting at root, key is inserted so that num[root] becomes the root of a heap.

Given an array of values num[1] to num[n], we could build the heap with this pseudocode:

    for h = n/2 downto 1 do          // n/2 is the last non-leaf node
        siftDown(num[h], num, h, n)
    end for

We now show how to write siftDown.

We can now write heapSort as follows:

    public static void heapSort(int[] num, int n) {
    //sort num[1] to num[n]
        //convert the array to a heap
        for (int k = n / 2; k >= 1; k--) siftDown(num[k], num, k, n);

        for (int k = n; k > 1; k--) {
            int item = num[k];            //extract current last item
            num[k] = num[1];              //move top of heap to current last node
            siftDown(item, num, 1, k-1);  //restore heap properties from 1 to k-1
        }
    } //end heapSort

We can test heapSort with Program P9.

Program P9.

    import java.io.*;

    public class HeapSortTest {
        public static void main(String[] args) throws IOException {
            int[] num = {0, 37, 25, 43, 65, 48, 84, 73, 18, 79, 56, 69, 32};
            int n = 12;
            heapSort(num, n);
            for (int h = 1; h <= n; h++) System.out.printf("%d ", num[h]);
            System.out.printf("\n");
        }

        public static void heapSort(int[] num, int n) {
        //sort num[1] to num[n]
            //convert the array to a heap
            for (int k = n / 2; k >= 1; k--) siftDown(num[k], num, k, n);

            for (int k = n; k > 1; k--) {
                int item = num[k];            //extract current last item
                num[k] = num[1];              //move top of heap to current last node
                siftDown(item, num, 1, k-1);  //restore heap properties from 1 to k-1
            }
        } //end heapSort

        public static void siftDown(int key, int[] num, int root, int last) {
            int bigger = 2 * root;
            while (bigger <= last) { //while there is at least one child
                if (bigger < last) //there is a right child as well; find the bigger
                    if (num[bigger+1] > num[bigger]) bigger++;
                //'bigger' holds the index of the bigger child
                if (key >= num[bigger]) break;
                //key is smaller; promote num[bigger]
                num[root] = num[bigger];
                root = bigger;
                bigger = 2 * root;
            }


            num[root] = key;
        } //end siftDown

    } //end class HeapSortTest

When run, Program P9 produces the following output (num[1] to num[12] sorted):

18 25 32 37 43 48 56 65 69 73 79 84

Programming note: As written, heapSort sorts an array assuming that n elements are stored from subscripts 1 to n. If they are stored from 0 to n-1, appropriate adjustments would have to be made. They would be based mainly on the following observations:

• The root is stored in num[0].

• The left child of node h is node 2h+1 if 2h+1 < n.

• The right child of node h is node 2h+2 if 2h+2 < n.

• The parent of node h is node (h-1)/2 (integer division).

• The last nonleaf node is (n-2)/2 (integer division).

You can verify these observations using the tree (n = 12) shown in Figure 9-6.

You are urged to rewrite heapSort so that it sorts the array num[0..n-1]. As a hint, note that the only change required in siftDown is in the calculation of bigger. Instead of 2 * root, we now use 2 * root + 1.
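Following that hint, here is a minimal sketch of a 0-based version, assuming the n items are held in num[0] to num[n-1]. Besides computing bigger as 2 * root + 1 in siftDown, the heap-building loop now starts at the last nonleaf node (n-2)/2 and runs down to 0, and the sorting loop treats num[0] as the root. The class name HeapSortZeroBased is hypothetical.

```java
import java.util.Arrays;

public class HeapSortZeroBased {
    // Sorts num[0] to num[n-1] in ascending order.
    public static void heapSort(int[] num, int n) {
        // Build the heap; (n-2)/2 is the last nonleaf node when indexing from 0.
        for (int k = (n - 2) / 2; k >= 0; k--)
            siftDown(num[k], num, k, n - 1);
        // Repeatedly move the largest item to the end of the unsorted part.
        for (int k = n - 1; k > 0; k--) {
            int item = num[k];              // extract current last item
            num[k] = num[0];                // move top of heap to current last node
            siftDown(item, num, 0, k - 1);  // restore the heap in num[0..k-1]
        }
    }

    // Same as the 1-based siftDown except that the left child of root is 2*root+1.
    // last is the index of the last item in the heap.
    public static void siftDown(int key, int[] num, int root, int last) {
        int bigger = 2 * root + 1;
        while (bigger <= last) {                       // at least one child
            if (bigger < last && num[bigger + 1] > num[bigger]) bigger++;
            if (key >= num[bigger]) break;             // key belongs at root
            num[root] = num[bigger];                   // promote the bigger child
            root = bigger;
            bigger = 2 * root + 1;
        }
        num[root] = key;
    }

    public static void main(String[] args) {
        int[] num = {37, 25, 43, 65, 48, 84, 73, 18, 79, 56, 69, 32};
        heapSort(num, num.length);
        System.out.println(Arrays.toString(num));
    }
}
```

Run on the same 12 numbers as Program P9, main prints [18, 25, 32, 37, 43, 48, 56, 65, 69, 73, 79, 84].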

9 Building a Heap Using siftUp

Consider the problem of adding a new node to an existing heap. Specifically, suppose num[1] to num[n] contain a heap. We want to add a new number, newKey, so that num[1] to num[n+1] contain a heap that includes newKey. We assume the array has room for the new key. For example, suppose we have the heap shown in Figure 9-7 and we want to add 40 to the heap. When the new number is added, the heap will contain 13 elements. We imagine 40 is placed in num[13] (but do not store it there, as yet) and compare it with its parent 43 in num[6]. Since 40 is smaller, the heap property is satisfied; we place 40 in num[13], and the process ends.

Figure 9-6. A binary tree stored in an array starting at 0

The process described is usually referred to as sifting up. We can write this process as a function, siftUp. We assume that siftUp is given an array heap[1..n] such that heap[1..n-1] contains a heap and heap[n] is to be sifted up so that heap[1..n] contains a heap. In other words, heap[n] plays the role of newKey in the previous discussion. We show siftUp as part of Program P9, which creates a heap out of numbers stored in a file, heap.

Program P9.

    import java.io.*;
    import java.util.*;

    public class SiftUpTest {
        final static int MaxHeapSize = 100;

        public static void main (String[] args) throws IOException {
            Scanner in = new Scanner(new FileReader("heap"));
            int[] num = new int[MaxHeapSize + 1];
            int n = 0, number;

            while (in.hasNextInt()) {
                number = in.nextInt();
                if (n < MaxHeapSize) { //check if array has room
                    num[++n] = number;
                    siftUp(num, n);
                }
            }

            for (int h = 1; h <= n; h++) System.out.printf("%d ", num[h]);
            System.out.printf("\n");
            in.close();
        } //end main

        public static void siftUp(int[] heap, int n) {
        //heap[1] to heap[n-1] contain a heap
        //sifts up the value in heap[n] so that heap[1..n] contains a heap
            int siftItem = heap[n];
            int child = n;
            int parent = child / 2;
            while (parent > 0) {
                if (siftItem <= heap[parent]) break;
                heap[child] = heap[parent]; //move down parent
                child = parent;
                parent = child / 2;
            }
            heap[child] = siftItem;
        } //end siftUp

    } //end class SiftUpTest

Suppose heap contains the following:

37 25 43 65 48 84 73 18 79 56 69 32

Program P9 will build the heap (described next) and print the following:

84 79 73 48 69 37 65 18 25 43 56 32

After 37, 25, and 43 are read, we will have Figure 9-9.

After 65, 48, 84, and 73 are read, we will have Figure 9-10.

And after 18, 79, 56, 69, and 32 are read, we will have the final heap shown in Figure 9-11.

Note that the heap in Figure 9-11 is different from that of Figure 9-3 even though they are formed from the same numbers. What hasn't changed is that the largest value, 84, is at the root. If the values are already stored in an array num[1..n], we can create a heap with the following:

    for (int k = 2; k <= n; k++) siftUp(num, k);

Figure 9-9. Heap after processing 37, 25, and 43

Figure 9-10. Heap after processing 65, 48, 84, and 73

Figure 9-11. Final heap after processing 18, 79, 56, 69, and 32
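The sketch below makes it concrete that the two construction methods can give different heaps from the same data. It builds one heap bottom-up with siftDown (as in heapSort) and one top-down with siftUp (as in the loop above). It uses this chapter's siftDown and siftUp logic with arrays indexed from 1. The class and helper names (HeapBuildComparison, buildWithSiftDown, buildWithSiftUp) are hypothetical.

```java
import java.util.Arrays;

public class HeapBuildComparison {
    // siftDown as in the chapter: num[root*2] and num[root*2+1] are roots of heaps.
    public static void siftDown(int key, int[] num, int root, int last) {
        int bigger = 2 * root;
        while (bigger <= last) {
            if (bigger < last && num[bigger + 1] > num[bigger]) bigger++;
            if (key >= num[bigger]) break;
            num[root] = num[bigger];
            root = bigger;
            bigger = 2 * root;
        }
        num[root] = key;
    }

    // siftUp as in the chapter: heap[1..n-1] is a heap; sift heap[n] up.
    public static void siftUp(int[] heap, int n) {
        int siftItem = heap[n];
        int child = n, parent = child / 2;
        while (parent > 0 && siftItem > heap[parent]) {
            heap[child] = heap[parent];   // move the parent down
            child = parent;
            parent = child / 2;
        }
        heap[child] = siftItem;
    }

    // Bottom-up: sift down from the last nonleaf node n/2 back to the root.
    public static int[] buildWithSiftDown(int[] data) {
        int n = data.length;
        int[] num = new int[n + 1];                    // num[0] unused
        System.arraycopy(data, 0, num, 1, n);
        for (int h = n / 2; h >= 1; h--) siftDown(num[h], num, h, n);
        return Arrays.copyOfRange(num, 1, n + 1);
    }

    // Top-down: num[1..k-1] is a heap; sift num[k] up, for k = 2..n.
    public static int[] buildWithSiftUp(int[] data) {
        int n = data.length;
        int[] num = new int[n + 1];
        System.arraycopy(data, 0, num, 1, n);
        for (int k = 2; k <= n; k++) siftUp(num, k);
        return Arrays.copyOfRange(num, 1, n + 1);
    }

    public static void main(String[] args) {
        int[] data = {37, 25, 43, 65, 48, 84, 73, 18, 79, 56, 69, 32};
        System.out.println(Arrays.toString(buildWithSiftDown(data)));
        System.out.println(Arrays.toString(buildWithSiftUp(data)));
    }
}
```

Both results have 84 at the root. The first line is [84, 79, 73, 65, 69, 43, 37, 18, 25, 56, 48, 32], the heap of Figure 9-3. The second is [84, 79, 73, 48, 69, 37, 65, 18, 25, 43, 56, 32], the same sequence that sifting up each number as it is read produces.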

We can think of priority as an integer: the bigger the integer, the higher the priority. Immediately, we can surmise that if we implement the queue as a max-heap, the item with the highest priority will be at the root, so it can be easily removed. Reorganizing the heap will simply involve "sifting down" the last item from the root. Adding an item will involve placing the item in the position after the current last one and sifting it up until it finds its correct position. To delete an arbitrary item from the queue, we will need to know its position. Deleting it will involve replacing it with the current last item and sifting it up or down to find its correct position. The heap will shrink by one item.

If we change the priority of an item, we may need to sift it either up or down to find its correct position. Of course, it may also remain in its original position, depending on the change. In many situations (for example, a job queue on a multitasking computer), the priority of a job may increase over time so that it eventually gets served. In these situations, a job moves closer to the top of the heap with each change; thus, only sifting up is required.

In a typical situation, information about the items in a priority queue is held in another structure that can be quickly searched, for example, a binary search tree. One field in the node will contain the index of the item in the array used to implement the priority queue. Using the job queue example, suppose we want to add an item to the queue. We can search the tree by job number, say, and add the item to the tree. Its priority number is used to determine its position in the queue. This position is stored in the tree node. If, later, the priority changes, the item's position in the queue is adjusted, and this new position is stored in the tree node. Note that adjusting this item may also involve changing the position of other items (as they move up or down the heap), and the tree will have to be updated for these items as well.
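The heap operations described for a priority queue can be sketched as a small max-heap class. This is an illustration under simplifying assumptions, not the book's implementation. Priorities are plain ints, and the heap is stored in heap[1..size]. A caller that wants to raise an item's priority must already know the item's position, so the binary search tree bookkeeping described above is omitted. The class MaxPriorityQueue and its method names are hypothetical.

```java
public class MaxPriorityQueue {
    private final int[] heap;   // heap[1..size] holds the priorities; heap[0] is unused
    private int size = 0;

    public MaxPriorityQueue(int capacity) { heap = new int[capacity + 1]; }

    public boolean isEmpty() { return size == 0; }

    // Place the new item after the current last one and sift it up.
    public void insert(int priority) {
        if (size >= heap.length - 1) throw new IllegalStateException("queue is full");
        heap[++size] = priority;
        siftUp(size);
    }

    // Remove the root (highest priority), then sift the last item down from the root.
    public int removeMax() {
        if (size == 0) throw new IllegalStateException("queue is empty");
        int top = heap[1];
        int last = heap[size--];
        if (size > 0) siftDown(last, 1);
        return top;
    }

    // Raise the priority of the item at position pos; only sifting up is needed.
    public void increasePriority(int pos, int newPriority) {
        if (pos < 1 || pos > size || newPriority < heap[pos])
            throw new IllegalArgumentException("bad position or priority");
        heap[pos] = newPriority;
        siftUp(pos);
    }

    private void siftUp(int child) {
        int item = heap[child];
        while (child > 1 && item > heap[child / 2]) {
            heap[child] = heap[child / 2];   // move the parent down
            child /= 2;
        }
        heap[child] = item;
    }

    private void siftDown(int key, int root) {
        int bigger = 2 * root;
        while (bigger <= size) {
            if (bigger < size && heap[bigger + 1] > heap[bigger]) bigger++;
            if (key >= heap[bigger]) break;
            heap[root] = heap[bigger];       // promote the bigger child
            root = bigger;
            bigger = 2 * root;
        }
        heap[root] = key;
    }

    public static void main(String[] args) {
        MaxPriorityQueue pq = new MaxPriorityQueue(20);
        for (int p : new int[] {37, 25, 43, 65, 48}) pq.insert(p);
        pq.increasePriority(5, 90);   // position 5 currently holds 43
        StringBuilder out = new StringBuilder();
        while (!pq.isEmpty()) out.append(pq.removeMax()).append(' ');
        System.out.println(out.toString().trim());
    }
}
```

In main, the five insertions leave 43 at position 5. Raising it to 90 sifts it up to the root, so the removals print 90 65 48 37 25.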

9 Sorting a List of Items with Quicksort

At the heart of quicksort is the notion of partitioning the list with respect to one of the values called a pivot. For example, suppose we are given the following list to be sorted:

We can partition it with respect to the first value, 53. This means placing 53 in such a position that all values to the left of it are smaller and all values to the right are greater than or equal to it. Shortly, we will describe an algorithm that will partition num as follows:

The value 53 is used as the pivot. It is placed in position 6. All values to the left of 53 are smaller than 53, and all values to the right are greater. The location in which the pivot is placed is called the division point (dp, say). By definition, 53 is in its final sorted position. If we can sort num[1..dp-1] and num[dp+1..n], we will have sorted the entire list. But we can use the same process to sort these pieces, indicating that a recursive procedure is appropriate.

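| num | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| given list | 53 | 12 | 98 | 63 | 18 | 32 | 80 | 46 | 72 | 21 |
| after partitioning on 53 | 21 | 12 | 18 | 32 | 46 | 53 | 80 | 98 | 72 | 63 |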

Assuming a function partition is available that partitions a given section of an array and returns the division point, we can write quicksort as follows:

    public static void quicksort(int[] A, int lo, int hi) {
    //sorts A[lo] to A[hi] in ascending order
        if (lo < hi) {
            int dp = partition(A, lo, hi);
            quicksort(A, lo, dp-1);
            quicksort(A, dp+1, hi);
        }
    } //end quicksort

The call quicksort(num, 1, n) will sort num[1..n] in ascending order. We now look at how partition may be written. Consider the following array:

We will partition it with respect to num[1], 53 (the pivot), by making one pass through the array. We will look at each number in turn. If it is not smaller than the pivot, we do nothing. If it is smaller, we move it to the left side of the array. Initially, we set the variable lastSmall to 1; as the method proceeds, lastSmall will be the index of the last item that is known to be smaller than the pivot. We partition num as follows:

Compare 12 with 53; it is smaller, so add 1 to lastSmall (making it 2) and swap num[2] with itself.

Compare 98 with 53; it is bigger, so move on.

Compare 63 with 53; it is bigger, so move on.

Compare 18 with 53; it is smaller, so add 1 to lastSmall (making it 3) and swap num[3], 98, with 18.

At this stage, we have this:

Compare 32 with 53; it is smaller, so add 1 to lastSmall (making it 4) and swap num[4], 63, with 32.

Compare 80 with 53; it is bigger, so move on.

Compare 46 with 53; it is smaller, so add 1 to lastSmall (making it 5) and swap num[5], 98, with 46.

At this stage, we have the following:

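| num | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| initial array | 53 | 12 | 98 | 63 | 18 | 32 | 80 | 46 | 72 | 21 |
| after 18 is processed (lastSmall = 3) | 53 | 12 | 18 | 63 | 98 | 32 | 80 | 46 | 72 | 21 |
| after 46 is processed (lastSmall = 5) | 53 | 12 | 18 | 32 | 46 | 63 | 80 | 98 | 72 | 21 |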

        public static void swap(int[] list, int i, int j) {
        //swap list[i] and list[j]
            int hold = list[i];
            list[i] = list[j];
            list[j] = hold;
        }

    } //end class QuicksortTest

When run, Program P9 produces the following output (num[1] to num[12] sorted):

18 25 32 37 43 48 56 65 69 73 79 84
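The partition function itself does not appear in this excerpt. The sketch below is one way to write it that agrees with the lastSmall walk-through and with the partitioned list shown earlier. It assumes, since the excerpt does not say, that the pass ends by swapping the pivot with num[lastSmall], which then becomes the division point. The class name LastSmallPartition is hypothetical, and num[0] is left unused to match the chapter's 1-based arrays.

```java
import java.util.Arrays;

public class LastSmallPartition {
    // Partitions A[lo..hi] around the pivot A[lo] and returns the division point dp.
    // Afterwards A[lo..dp-1] < A[dp] <= A[dp+1..hi], so A[dp] is in its final position.
    public static int partition(int[] A, int lo, int hi) {
        int pivot = A[lo];
        int lastSmall = lo;                 // index of the last item known to be < pivot
        for (int j = lo + 1; j <= hi; j++) {
            if (A[j] < pivot) {             // smaller: move it to the left part
                ++lastSmall;
                swap(A, lastSmall, j);
            }                               // not smaller: do nothing
        }
        swap(A, lo, lastSmall);             // put the pivot between the two parts
        return lastSmall;
    }

    public static void quicksort(int[] A, int lo, int hi) {
        if (lo < hi) {
            int dp = partition(A, lo, hi);
            quicksort(A, lo, dp - 1);
            quicksort(A, dp + 1, hi);
        }
    }

    static void swap(int[] list, int i, int j) {
        int hold = list[i];
        list[i] = list[j];
        list[j] = hold;
    }

    public static void main(String[] args) {
        int[] num = {0, 53, 12, 98, 63, 18, 32, 80, 46, 72, 21};  // num[0] unused
        int dp = partition(num, 1, 10);
        System.out.println("dp = " + dp + ": " + Arrays.toString(Arrays.copyOfRange(num, 1, 11)));
        quicksort(num, 1, 10);
        System.out.println(Arrays.toString(Arrays.copyOfRange(num, 1, 11)));
    }
}
```

Continuing the walk-through, 72 is left alone, and 21 is swapped with 63 (lastSmall becomes 6). The final swap of num[1] and num[6] then gives 21 12 18 32 46 53 80 98 72 63 with dp = 6, so the first line printed is dp = 6: [21, 12, 18, 32, 46, 53, 80, 98, 72, 63].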

Quicksort is one of those methods whose performance can range from very fast to very slow. Typically, it is of order $O(n \log_2 n)$, and for random data, the number of comparisons varies between $n \log_2 n$ and $3n \log_2 n$. However, things can get worse. The idea behind partitioning is to break up the given portion into two fairly equal pieces. Whether this happens depends, to a large extent, on the value that is chosen as the pivot. In the function, we choose the first element as the pivot. This will work well in most cases, especially for random data. However, if the first element happens to be the smallest, the partitioning operation becomes almost useless since the division point will simply be the first position. The "left" piece will be empty, and the "right" piece will be only one element smaller than the given sublist. Similar remarks apply if the pivot is the largest element. While the algorithm will still work, it will be slowed considerably. For example, if the given array is sorted, quicksort will become as slow as selection sort (both make $n(n-1)/2$ comparisons).

One way to avoid this problem is to choose a random element as the pivot, not merely the first one. While it is still possible that this method will choose the smallest (or the largest), that choice will be merely by chance. Yet another method is to choose the median of the first (A[lo]), last (A[hi]), and middle (A[(lo+hi)/2]) items as the pivot. You are advised to experiment with various ways of choosing the pivot. Our experiments showed that choosing a random element as the pivot was simple and effective, even for sorted data. In fact, in many cases, the method ran faster with sorted data than with random data, an unusual result for quicksort.

One possible disadvantage of quicksort is that, depending on the actual data being sorted, the overhead of the recursive calls may be high. We will see how to minimize this in Section 9.5. On the plus side, quicksort uses very little extra storage. By contrast, mergesort (which is also recursive) needs extra storage (the same size as the array being sorted) to facilitate the merging of sorted pieces. Heapsort has neither of these disadvantages. It is not recursive and uses very little extra storage. And, as noted in Section 9, heapsort's performance is predictable: it is always at worst $2n \log_2 n$, regardless of the order of the items in the given array. (This is not stability in the usual sorting sense of preserving the relative order of equal keys, which heapsort does not guarantee.)
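The sketch below makes the pivot discussion concrete by counting the comparisons a lastSmall-style partition makes on already-sorted input. It runs once with the first element as pivot, and once with a randomly chosen element swapped into A[lo] first. It uses 0-based arrays, a fixed random seed, and hypothetical names (PivotChoiceDemo, randomPartition, countComparisons). A median-of-three choice would slot in the same way as randomPartition.

```java
import java.util.Random;

public class PivotChoiceDemo {
    static long comparisons = 0;                  // counts A[j] < pivot tests
    static final Random RNG = new Random(42);     // fixed seed for repeatable runs

    // Partition A[lo..hi] around A[lo]; returns the division point.
    static int partition(int[] A, int lo, int hi) {
        int pivot = A[lo], lastSmall = lo;
        for (int j = lo + 1; j <= hi; j++) {
            comparisons++;
            if (A[j] < pivot) swap(A, ++lastSmall, j);
        }
        swap(A, lo, lastSmall);
        return lastSmall;
    }

    // Swap a randomly chosen element into A[lo] so that it becomes the pivot.
    static int randomPartition(int[] A, int lo, int hi) {
        swap(A, lo, lo + RNG.nextInt(hi - lo + 1));
        return partition(A, lo, hi);
    }

    static void quicksort(int[] A, int lo, int hi, boolean randomPivot) {
        if (lo < hi) {
            int dp = randomPivot ? randomPartition(A, lo, hi) : partition(A, lo, hi);
            quicksort(A, lo, dp - 1, randomPivot);
            quicksort(A, dp + 1, hi, randomPivot);
        }
    }

    static void swap(int[] list, int i, int j) {
        int hold = list[i];
        list[i] = list[j];
        list[j] = hold;
    }

    // Sorts 0, 1, ..., n-1 (already in order) and returns the comparison count.
    static long countComparisons(int n, boolean randomPivot) {
        int[] A = new int[n];
        for (int i = 0; i < n; i++) A[i] = i;
        comparisons = 0;
        quicksort(A, 0, n - 1, randomPivot);
        return comparisons;
    }

    public static void main(String[] args) {
        int n = 1000;
        System.out.println("first-element pivot: " + countComparisons(n, false));
        System.out.println("random pivot:        " + countComparisons(n, true));
    }
}
```

With the first element as pivot, each sorted sublist of m items costs m - 1 comparisons and sheds only the pivot. For n = 1000 the first line therefore reports 999 + 998 + ... + 1 = 499500, the same count as selection sort. The random-pivot count depends on the seed but is far smaller.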

9.5 Another Way to Partition

There are many ways to achieve the goal of partitioning: splitting the list into two parts such that the elements in the left part are smaller than (or equal to) the elements in the right part. Our first method, shown earlier, placed the pivot in its final position. For variety, we will look at another way to partition. While this method still partitions with respect to a pivot, it does not place the pivot in its final sorted position. As we will see, this is not a problem. Consider, again, the array num[1..n] where n = 10.

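| num | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| value | 53 | 12 | 98 | 63 | 18 | 32 | 80 | 46 | 72 | 21 |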

We choose 53 as the pivot. The general idea is to scan from the right looking for a key that is smaller than, or equal to, the pivot. We then scan from the left for a key that is greater than, or equal to, the pivot. We swap these two values; this process effectively puts smaller values to the left and bigger values to the right. We use two variables, lo and hi, to mark our positions on the left and right. Initially, we set lo to 0 and hi to 11 (n+1). We then loop as follows:

Subtract 1 from hi (making it 10).

Compare num[hi], 21, with 53; it is smaller, so stop scanning from the right with hi = 10.

Add 1 to lo (making it 1).

Compare num[lo], 53, with 53; it is not smaller, so stop scanning from the left with lo = 1.

lo (1) is less than hi (10), so swap num[lo] and num[hi].

Subtract 1 from hi (making it 9).

Compare num[hi], 72, with 53; it is bigger, so decrease hi (making it 8). Compare num[hi], 46, with 53; it is smaller, so stop scanning from the right with hi = 8.

Add 1 to lo (making it 2).

Compare num[lo], 12, with 53; it is smaller, so add 1 to lo (making it 3). Compare num[lo], 98, with 53; it is bigger, so stop scanning from the left with lo = 3.

lo (3) is less than hi (8), so swap num[lo] and num[hi].

At this stage, we have lo = 3, hi = 8, and num as follows:

Subtract 1 from hi (making it 7).

Compare num[hi], 80, with 53; it is bigger, so decrease hi (making it 6). Compare num[hi], 32, with 53; it is smaller, so stop scanning from the right with hi = 6.

Add 1 to lo (making it 4).

Compare num[lo], 63, with 53; it is bigger, so stop scanning from the left with lo = 4.

lo (4) is less than hi (6), so swap num[lo] and num[hi], giving this:

Subtract 1 from hi (making it 5).

Compare num[hi], 18, with 53; it is smaller, so stop scanning from the right with hi = 5.

Add 1 to lo (making it 5).

Compare num[lo], 18, with 53; it is smaller, so add 1 to lo (making it 6). Compare num[lo], 63, with 53; it is bigger, so stop scanning from the left with lo = 6.

lo (6) is not less than hi (5), so the algorithm ends.

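| num | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| at lo = 3, hi = 8 (after the second swap) | 21 | 12 | 46 | 63 | 18 | 32 | 80 | 98 | 72 | 53 |
| after swapping num[4] and num[6] | 21 | 12 | 46 | 32 | 18 | 63 | 80 | 98 | 72 | 53 |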
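Running partition2 (from Program P9, the Quicksort3Test listing in this section) on the same array reproduces this trace. The sketch below is a minimal harness under the hypothetical class name Partition2Demo. Its partition2 and swap are copied from that program.

```java
import java.util.Arrays;

public class Partition2Demo {
    // partition2 as in Program P9: returns dp such that A[lo..dp] <= A[dp+1..hi].
    // The pivot is A[lo]; it need not end up in its final sorted position.
    public static int partition2(int[] A, int lo, int hi) {
        int pivot = A[lo];
        --lo; ++hi;
        while (lo < hi) {
            do --hi; while (A[hi] > pivot);   // scan from the right for a key <= pivot
            do ++lo; while (A[lo] < pivot);   // scan from the left for a key >= pivot
            if (lo < hi) swap(A, lo, hi);
        }
        return hi;
    }

    static void swap(int[] list, int i, int j) {
        int hold = list[i];
        list[i] = list[j];
        list[j] = hold;
    }

    public static void main(String[] args) {
        int[] num = {0, 53, 12, 98, 63, 18, 32, 80, 46, 72, 21};  // num[0] unused
        int dp = partition2(num, 1, 10);
        System.out.println("dp = " + dp + ": " + Arrays.toString(Arrays.copyOfRange(num, 1, 11)));
    }
}
```

It prints dp = 5: [21, 12, 46, 32, 18, 63, 80, 98, 72, 53]. Every value in num[1..5] is at most 53, and every value in num[6..10] is at least 53. Yet 53 itself sits in position 10 rather than position 6, its place in sorted order. That is why the pieces to sort next are num[1..dp] and num[dp+1..10], with dp included on the left.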


For example, suppose we are sorting A[1..99] and the first division point is 40. Assume we are using partition2, which does not put the pivot in its final sorted position. Thus, we must sort A[1..40] and A[41..99] to complete the sort. We will stack (41, 99) and deal with A[1..40] (the shorter sublist) first. Suppose the division point for A[1..40] is 25. We will stack (1, 25) and process A[26..40] first. At this stage, we have two sublists, (41, 99) and (1, 25), on the stack that remain to be sorted. Attempting to sort A[26..40] will cause another sublist to be added to the stack, and so on. In our implementation, we will also add the shorter sublist to the stack, but this will be taken off immediately and processed. The result mentioned here assures us that there will never be more than $\lceil \log_2 99 \rceil = 7$ elements on the stack at any given time. Even for n = 1,000,000, we are guaranteed that the number of stack items will not exceed 20. Of course, we will have to manipulate the stack ourselves. Each stack element will consist of two integers (left and right, say), meaning that the portion of the list from left to right remains to be sorted. We can define NodeData as follows:

    class NodeData {
        int left, right;

        public NodeData (int a, int b) {
            left = a;
            right = b;
        }

        public static NodeData getRogueValue() {return new NodeData(-1, -1);}

    } //end class NodeData

We will use the stack implementation from Section 4. We now write quicksort3 based on the previous discussion. It is shown as part of the self-contained Program P9. This program reads numbers from the file quick, sorts the numbers using quicksort3, and prints the sorted numbers, ten per line.

Program P9.

    import java.io.*;
    import java.util.*;

    public class Quicksort3Test {
        final static int MaxNumbers = 100;

        public static void main (String[] args) throws IOException {
            Scanner in = new Scanner(new FileReader("quick"));
            int[] num = new int[MaxNumbers+1];
            int n = 0, number;

            while (in.hasNextInt()) {
                number = in.nextInt();
                if (n < MaxNumbers) num[++n] = number; //store if array has room
            }
            in.close();

            quicksort3(num, 1, n);
            for (int h = 1; h <= n; h++) {
                System.out.printf("%d ", num[h]);
                if (h % 10 == 0) System.out.printf("\n"); //print 10 numbers per line
            }
            System.out.printf("\n");
        } //end main

        public static void quicksort3(int[] A, int lo, int hi) {
            //java.util.Stack stands in here for the Section 4 stack (same push, pop, empty operations)
            Stack<NodeData> S = new Stack<>();
            S.push(new NodeData(lo, hi));
            int stackItems = 1, maxStackItems = 1;

            while (!S.empty()) {
                --stackItems;
                NodeData d = S.pop();
                if (d.left < d.right) { //if the sublist is > 1 element
                    int dp = partition2(A, d.left, d.right);
                    if (dp - d.left + 1 < d.right - dp) { //compare lengths of sublists
                        S.push(new NodeData(dp+1, d.right));
                        S.push(new NodeData(d.left, dp));
                    }
                    else {
                        S.push(new NodeData(d.left, dp));
                        S.push(new NodeData(dp+1, d.right));
                    }
                    stackItems += 2; //two items added to stack
                } //end if
                if (stackItems > maxStackItems) maxStackItems = stackItems;
            } //end while
            System.out.printf("Max stack items: %d\n\n", maxStackItems);
        } //end quicksort3

        public static int partition2(int[] A, int lo, int hi) {
        //return dp such that A[lo..dp] <= A[dp+1..hi]
            int pivot = A[lo];
            --lo; ++hi;
            while (lo < hi) {
                do --hi; while (A[hi] > pivot);
                do ++lo; while (A[lo] < pivot);
                if (lo < hi) swap(A, lo, hi);
            }
            return hi;
        } //end partition2

        public static void swap(int[] list, int i, int j) {
        //swap list[i] and list[j]
            int hold = list[i];
            list[i] = list[j];
            list[j] = hold;
        } //end swap

    } //end class Quicksort3Test

    class NodeData {
        int left, right;

        public NodeData (int a, int b) {
            left = a;
            right = b;
        }

        public static NodeData getRogueValue() {return new NodeData(-1, -1);}

    } //end class NodeData
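The stack-size figures quoted for quicksort3 are $\log_2 n$ rounded up. As a quick check of that arithmetic for the two cases mentioned, assuming that bound:

$$\lceil \log_2 99 \rceil = 7, \qquad\text{since}\quad 2^6 = 64 < 99 \le 128 = 2^7$$

$$\lceil \log_2 1{,}000{,}000 \rceil = 20, \qquad\text{since}\quad 2^{19} = 524{,}288 < 1{,}000{,}000 \le 1{,}048{,}576 = 2^{20}$$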
