8.5 Gaussian BNs - senior

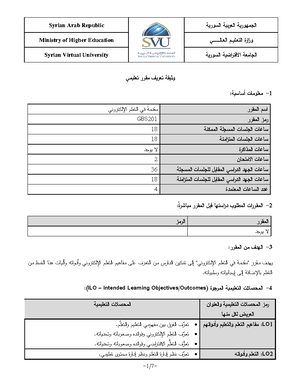

english 101 (Eng101)

University of Shendi


Gaussian Bayesian Networks

Sargur Srihari srihari@cedar.buffalo

Topics

- Linear Gaussian Model

- Learning Parameters of a Gaussian Bayesian Network

- Multivariate Gaussian representations
  1. Covariance Form
  2. Information Form
     1. Bayesian Networks
     2. Markov Random Fields

Gaussian Bayesian Network

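- Definition: All variables are Gaussian and all CPDs are linear Gaussian

- Let $Y$ be a linear Gaussian of its parents $X_1, \ldots, X_k$

  - i.e., the mean of $Y$ is a linear combination of the values of its Gaussian parents: $P(Y \mid \mathbf{X}) \sim N(\beta_0 + \boldsymbol{\beta}^T \mathbf{X};\ \sigma^2)$

- If $X_1, \ldots, X_k$ are jointly normal $N(\boldsymbol{\mu}; \Sigma)$, then the distribution of $Y$ is normal $N(\mu_Y; \sigma_Y^2)$, where $\mu_Y = \beta_0 + \boldsymbol{\beta}^T \boldsymbol{\mu}$ and $\sigma_Y^2 = \sigma^2 + \boldsymbol{\beta}^T \Sigma \boldsymbol{\beta}$

- The joint distribution over $(\mathbf{X}, Y)$ is normal with $\mathrm{Cov}[X_i; Y] = \sum_{j=1}^{k} \beta_j \Sigma_{i,j}$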

[Figure: network with parents $X_1, X_2, \ldots, X_k$ and child $Y$]
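The moments stated on this slide can be checked numerically. The following is a minimal sketch, assuming NumPy; the function name `linear_gaussian_joint` and the numeric values are hypothetical and chosen only for illustration. It builds the joint mean and covariance over $(\mathbf{X}, Y)$ from the parent distribution and the linear Gaussian CPD parameters.

```python
import numpy as np

def linear_gaussian_joint(mu, Sigma, beta0, beta, sigma2):
    """Joint Gaussian over (X, Y) for X ~ N(mu, Sigma), Y | X ~ N(beta0 + beta^T X, sigma2)."""
    mu = np.asarray(mu, dtype=float)
    Sigma = np.asarray(Sigma, dtype=float)
    beta = np.asarray(beta, dtype=float)

    mu_y = beta0 + beta @ mu                 # mu_Y = beta_0 + beta^T mu
    var_y = sigma2 + beta @ Sigma @ beta     # sigma_Y^2 = sigma^2 + beta^T Sigma beta
    cov_xy = Sigma @ beta                    # Cov[X_i; Y] = sum_j beta_j Sigma_ij

    joint_mu = np.append(mu, mu_y)
    joint_Sigma = np.block([
        [Sigma, cov_xy[:, None]],
        [cov_xy[None, :], np.array([[var_y]])],
    ])
    return joint_mu, joint_Sigma

# Hypothetical parent distribution and CPD parameters (not from the slides)
joint_mu, joint_Sigma = linear_gaussian_joint(
    mu=[1.0, -3.0],
    Sigma=[[4.0, 2.0], [2.0, 5.0]],
    beta0=0.5,
    beta=[1.0, -1.0],
    sigma2=2.0,
)
print(joint_mu)
print(joint_Sigma)
```

The last row and column of `joint_Sigma` hold the covariances of each parent with $Y$, and the bottom-right entry is $\sigma_Y^2$.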

Learning parameters of Gaussian Bayesian Network

- All variables are Gaussian

- $P(X \mid u_1, \ldots, u_k) \sim N(\beta_0 + \beta_1 u_1 + \ldots + \beta_k u_k;\ \sigma^2)$

- Task is to learn the parameters $\theta_{X \mid U} = \{\beta_0, \beta_1, \ldots, \beta_k, \sigma^2\}$

- To find the ML estimate of the parameters, define the log-likelihood

- Closed-form solutions for parameters

[Figure: network with parents $U_1, U_2, \ldots, U_k$ and child $X$]

$$\log L_X(\theta_{X \mid U} : D) = \sum_m \left[ -\frac{1}{2} \log(2\pi\sigma^2) - \frac{1}{2\sigma^2} \left( \beta_0 + \beta_1 u_1[m] + \ldots + \beta_k u_k[m] - x[m] \right)^2 \right]$$

Solving Linear Equations

Matrix solution: $A\mathbf{x} = \mathbf{b}$, therefore $\mathbf{x} = A^{-1}\mathbf{b}$

Gaussian Elimination
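Setting the derivatives of the log-likelihood with respect to $\beta_0, \ldots, \beta_k$ to zero gives $k+1$ linear equations in the coefficients, which is where the $A\mathbf{x} = \mathbf{b}$ form comes from. The sketch below assumes NumPy and synthetic data; the function name `fit_linear_gaussian_mean` and the data-generating coefficients are hypothetical. It forms the normal equations and solves them with `np.linalg.solve`, which uses an LU factorization (Gaussian elimination with pivoting) instead of forming $A^{-1}$ explicitly.

```python
import numpy as np

def fit_linear_gaussian_mean(U, x):
    """ML estimate of (beta_0, beta_1, ..., beta_k) from the normal equations A beta = c."""
    U = np.asarray(U, dtype=float)
    x = np.asarray(x, dtype=float)
    M = U.shape[0]
    design = np.hstack([np.ones((M, 1)), U])   # leading column of ones carries beta_0
    A = design.T @ design                      # (k+1) x (k+1) matrix of sums of products
    c = design.T @ x                           # right-hand side
    return np.linalg.solve(A, c)               # LU-based solve, no explicit inverse

# Synthetic data from an assumed model x = 1 + 2*u1 - 0.5*u2 + noise
rng = np.random.default_rng(0)
U = rng.normal(size=(500, 2))
x = 1.0 + 2.0 * U[:, 0] - 0.5 * U[:, 1] + rng.normal(scale=0.1, size=500)

beta_hat = fit_linear_gaussian_mean(U, x)
print(beta_hat)   # estimates of beta_0, beta_1, beta_2
```

Solving the system directly is usually preferred over computing $A^{-1}$, since it is cheaper and numerically better behaved when the parents are strongly correlated.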

Estimating variance

- Taking the derivative of the likelihood and setting it to zero, we get

$$\sigma^2 = \mathrm{Cov}_D[X; X] - \sum_i \sum_j \beta_i \beta_j \mathrm{Cov}_D[U_i; U_j]$$

- where

$$E_D[X] = \frac{1}{M} \sum_m x[m]$$

$$\mathrm{Cov}_D[X; Y] = E_D[X \cdot Y] - E_D[X] \cdot E_D[Y]$$

$$E_D[X \cdot Y] = \frac{1}{M} \sum_m x[m]\, y[m]$$

- First term is the empirical variance of $X$

- Other terms are empirical covariances of inputs

[Figure: network with parents $U_1, U_2, \ldots, U_k$ and child $X$]
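As a sanity check on the variance estimate, the sketch below (assuming NumPy and synthetic data; `cov_d` and `ml_variance` are hypothetical helper names) evaluates the empirical-covariance expression and compares it with the mean squared residual of the fitted model. The two agree when the $\beta_i$ are the ML coefficients fitted with an intercept.

```python
import numpy as np

def cov_d(a, b):
    """Empirical covariance Cov_D[A; B] = E_D[A*B] - E_D[A]*E_D[B] (1/M normalization)."""
    return np.mean(a * b) - np.mean(a) * np.mean(b)

def ml_variance(U, x, beta):
    """sigma^2 = Cov_D[X;X] - sum_i sum_j beta_i beta_j Cov_D[U_i;U_j].

    beta holds (beta_1, ..., beta_k) only, without the intercept beta_0.
    """
    k = U.shape[1]
    explained = sum(
        beta[i] * beta[j] * cov_d(U[:, i], U[:, j])
        for i in range(k)
        for j in range(k)
    )
    return cov_d(x, x) - explained

# Synthetic data from an assumed model with noise standard deviation 0.3
rng = np.random.default_rng(1)
U = rng.normal(size=(400, 2))
x = 0.5 + 1.5 * U[:, 0] - 2.0 * U[:, 1] + rng.normal(scale=0.3, size=400)

# ML coefficients via least squares (intercept in the first column)
design = np.hstack([np.ones((400, 1)), U])
coef, *_ = np.linalg.lstsq(design, x, rcond=None)

sigma2_hat = ml_variance(U, x, coef[1:])
residual_mse = np.mean((x - design @ coef) ** 2)
print(sigma2_hat, residual_mse)   # the two values should match up to rounding
```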

Multivariate Gaussian

- Three equivalent representations
  1. Basic parameterization (covariance form)
  2. Gaussian Bayesian network (information form)
     - Conversion to information form (precision matrix) captures conditional independencies
  3. Gaussian Markov Random Field
     - Easiest conversion

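$$p(\mathbf{x}) = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp\left[ -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^T \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right]$$

$$\boldsymbol{\mu} = E[\mathbf{x}], \qquad \Sigma = E[\mathbf{x}\mathbf{x}^T] - E[\mathbf{x}]\, E[\mathbf{x}]^T$$

Example:

$$\boldsymbol{\mu} = \begin{pmatrix} 1 \\ -3 \\ 4 \end{pmatrix}, \qquad \Sigma = \begin{pmatrix} 4 & 2 & -2 \\ 2 & 5 & -5 \\ -2 & -5 & 8 \end{pmatrix}$$

- $x_1$ is negatively correlated with $x_3$

- $x_2$ is negatively correlated with $x_3$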

Gaussian Distribution Operations

- There are two main operations we wish to perform on a distribution:
  1. Computing the marginal distribution over some subset $\mathbf{Y}$
  2. Conditioning the distribution on some $\mathbf{Z} = \mathbf{z}$

- Marginalization is trivially performed in the covariance form

- Conditioning a Gaussian is very easy to perform in the information form
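Both operations reduce to index selection in the appropriate form. The sketch below assumes NumPy and uses the three-variable example $\boldsymbol{\mu} = (1, -3, 4)$ with the covariance matrix from the multivariate Gaussian slide as read here; the function names `marginalize_cov` and `condition_info` and the observed value $z = 5$ are hypothetical. Marginalizing keeps the matching sub-blocks of $\boldsymbol{\mu}$ and $\Sigma$. Conditioning on $\mathbf{Z} = \mathbf{z}$ in the information form keeps the sub-block $J_{YY}$ and shifts the potential vector to $\mathbf{h}_Y - J_{YZ}\mathbf{z}$.

```python
import numpy as np

def marginalize_cov(mu, Sigma, keep):
    """Marginal over the variables in `keep`: select sub-blocks of mu and Sigma."""
    keep = np.asarray(keep)
    return mu[keep], Sigma[np.ix_(keep, keep)]

def condition_info(h, J, keep, obs, z):
    """Condition on x[obs] = z in information form (h = J mu, J = Sigma^-1)."""
    keep, obs = np.asarray(keep), np.asarray(obs)
    J_cond = J[np.ix_(keep, keep)]                   # precision of the kept variables
    h_cond = h[keep] - J[np.ix_(keep, obs)] @ z      # shifted potential vector
    return h_cond, J_cond

mu = np.array([1.0, -3.0, 4.0])
Sigma = np.array([[4.0, 2.0, -2.0],
                  [2.0, 5.0, -5.0],
                  [-2.0, -5.0, 8.0]])
J = np.linalg.inv(Sigma)
h = J @ mu

# Marginal over (x1, x2)
mu_12, Sigma_12 = marginalize_cov(mu, Sigma, [0, 1])

# Distribution of (x1, x2) given x3 = 5 (hypothetical observation)
h_c, J_c = condition_info(h, J, keep=[0, 1], obs=[2], z=np.array([5.0]))
cond_cov = np.linalg.inv(J_c)      # convert back to covariance form for inspection
cond_mean = cond_cov @ h_c
print(cond_mean)
print(cond_cov)
```

Converting the conditional back to covariance form is only done here to make the result easy to read; the information-form pair `(h_c, J_c)` already fully specifies the conditional distribution.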

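Determining Conditional Distribution using Covariance Form

- Let $(\mathbf{X}, Y)$ have a normal distribution defined by

$$p(\mathbf{X}, Y) = N\left( \begin{pmatrix} \boldsymbol{\mu}_X \\ \mu_Y \end{pmatrix};\ \begin{pmatrix} \Sigma_{XX} & \Sigma_{XY} \\ \Sigma_{YX} & \Sigma_{YY} \end{pmatrix} \right)$$

- Then the conditional distribution $P(Y \mid \mathbf{X})$ is given by $P(Y \mid \mathbf{X}) \sim N(\beta_0 + \boldsymbol{\beta}^T \mathbf{X};\ \sigma^2)$, where

$$\beta_0 = \mu_Y - \Sigma_{YX} \Sigma_{XX}^{-1} \boldsymbol{\mu}_X, \qquad \boldsymbol{\beta} = \Sigma_{XX}^{-1} \Sigma_{XY}, \qquad \sigma^2 = \Sigma_{YY} - \Sigma_{YX} \Sigma_{XX}^{-1} \Sigma_{XY}$$

- This allows us to take a joint Gaussian distribution and construct a Bayesian network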
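The conversion from a joint Gaussian to a linear Gaussian CPD can be sketched as follows, assuming NumPy. The function name `conditional_linear_gaussian` is hypothetical, and the example reuses the three-variable mean and covariance from the multivariate Gaussian slide as read here, taking $\mathbf{X} = (x_1, x_2)$ as parents and $Y = x_3$ as the child.

```python
import numpy as np

def conditional_linear_gaussian(mu, Sigma, x_idx, y_idx):
    """Parameters (beta_0, beta, sigma^2) of P(Y | X) for a scalar Y in a joint Gaussian."""
    x_idx = np.asarray(x_idx)
    S_xx = Sigma[np.ix_(x_idx, x_idx)]
    S_xy = Sigma[x_idx, y_idx]                   # covariances of each parent with Y
    beta = np.linalg.solve(S_xx, S_xy)           # beta = Sigma_XX^-1 Sigma_XY
    beta0 = mu[y_idx] - beta @ mu[x_idx]         # beta_0 = mu_Y - Sigma_YX Sigma_XX^-1 mu_X
    sigma2 = Sigma[y_idx, y_idx] - S_xy @ beta   # sigma^2 = Sigma_YY - Sigma_YX Sigma_XX^-1 Sigma_XY
    return beta0, beta, sigma2

mu = np.array([1.0, -3.0, 4.0])
Sigma = np.array([[4.0, 2.0, -2.0],
                  [2.0, 5.0, -5.0],
                  [-2.0, -5.0, 8.0]])

beta0, beta, sigma2 = conditional_linear_gaussian(mu, Sigma, x_idx=[0, 1], y_idx=2)
print(beta0, beta, sigma2)
```

For this example the coefficient on $x_1$ comes out as zero, so $x_3$ would need only $x_2$ as a parent in the constructed network.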

Constructing a BN from a Gaussian

- Definition of I-map

  - $\mathcal{I}$ is the set of independence assertions of the form $(X \perp Y \mid Z)$ which are true of distribution $P$

  - $G$ is an I-map of $P$ implies: $\mathcal{I}(G) \subseteq \mathcal{I}$

- Minimal I-map: removal of a single edge renders the graph not an I-map

- The algorithm uses the principle that for $\mathbf{Z}$ to be the parents of node $X$, $(X \perp \mathbf{Y} \mid \mathbf{Z})$ must hold, where $\mathbf{Y} = \mathcal{X} - \mathbf{Z}$

  - Need to check whether $X$ is independent of each $Y_i$ given $\mathbf{Z}$

  - Can be done using a deviance measure, $\chi^2$ or mutual information (KL-divergence):

$$d_{\chi^2}(D) = \sum_{x_i, x_j} \frac{\left( M[x_i, x_j] - M \cdot \hat{P}(x_i) \cdot \hat{P}(x_j) \right)^2}{M \cdot \hat{P}(x_i) \cdot \hat{P}(x_j)}$$

$$d_I(D) = \frac{1}{M} \sum_{x_i, x_j} M[x_i, x_j] \log \frac{M \cdot M[x_i, x_j]}{M[x_i]\, M[x_j]}$$

  - Simpler method: use the information form of the Gaussian
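For discrete data, the two deviance measures can be computed from a table of joint counts $M[x_i, x_j]$. The following is a minimal sketch, assuming NumPy and a two-way count table whose row and column totals are all positive; the function name `deviances` and the count tables are hypothetical. Mutual information is computed here as the KL-divergence between the empirical joint and the product of the empirical marginals.

```python
import numpy as np

def deviances(counts):
    """Chi-square and mutual-information deviances from a 2-D table of joint counts."""
    counts = np.asarray(counts, dtype=float)
    M = counts.sum()
    # Expected counts under independence: M * P_hat(x_i) * P_hat(x_j)
    expected = np.outer(counts.sum(axis=1), counts.sum(axis=0)) / M
    chi2 = np.sum((counts - expected) ** 2 / expected)
    nz = counts > 0                                   # 0 * log(0) is treated as 0
    mi = np.sum(counts[nz] * np.log(counts[nz] / expected[nz])) / M
    return chi2, mi

# Hypothetical tables: the first is exactly independent, the second fully dependent
chi2_ind, mi_ind = deviances([[10, 20], [30, 60]])
chi2_dep, mi_dep = deviances([[50, 0], [0, 50]])
print(chi2_ind, mi_ind)
print(chi2_dep, mi_dep)
```

Both measures are zero when the empirical joint factorizes exactly and grow as the counts depart from independence; in practice a threshold or significance test decides whether a deviation counts as dependence.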

Information form gives edges of BN

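- Standard form

$$p(\mathbf{x}) = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp\left[ -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^T \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right]$$

- Using the precision matrix $J = \Sigma^{-1}$

$$-\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^T \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu}) = -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^T J (\mathbf{x} - \boldsymbol{\mu}) = -\frac{1}{2} \left[ \mathbf{x}^T J \mathbf{x} - 2 \mathbf{x}^T J \boldsymbol{\mu} + \boldsymbol{\mu}^T J \boldsymbol{\mu} \right]$$

- Since the last term is constant, we get the information form

$$p(\mathbf{x}) \propto \exp\left[ -\frac{1}{2} \mathbf{x}^T J \mathbf{x} + (J\boldsymbol{\mu})^T \mathbf{x} \right]$$

- $J\boldsymbol{\mu}$ is called the potential vector

- $J_{i,j} = 0$ iff $X_i$ is independent of $X_j$ given $\mathcal{X} - \{X_i, X_j\}$

- Example

$$\boldsymbol{\mu} = \begin{pmatrix} 1 \\ -3 \\ 4 \end{pmatrix}, \qquad \Sigma = \begin{pmatrix} 4 & 2 & -2 \\ 2 & 5 & -5 \\ -2 & -5 & 8 \end{pmatrix}, \qquad J = \begin{pmatrix} 0.3125 & -0.125 & 0 \\ -0.125 & 0.5833 & 0.3333 \\ 0 & 0.3333 & 0.3333 \end{pmatrix}$$

- Since $J_{1,3} = 0$, $x_1$ and $x_3$ are independent given $x_2$

- Non-zero entries of $J$ define edges (not correlations: $\Sigma_{1,3} = -2 \neq 0$ even though $J_{1,3} = 0$)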
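The edge structure can be read off numerically by inverting the covariance matrix and listing the non-zero off-diagonal entries of $J$. This is a minimal sketch, assuming NumPy and the three-variable example mean and covariance as read from this slide; the function name `information_form` and the tolerance `tol` are hypothetical choices, the tolerance being needed because a numerically computed inverse rarely contains exact zeros.

```python
import numpy as np

def information_form(mu, Sigma, tol=1e-9):
    """Return precision matrix J, potential vector h = J mu, and edges (1-based pairs)."""
    J = np.linalg.inv(Sigma)
    h = J @ mu
    d = len(mu)
    edges = [
        (i + 1, j + 1)
        for i in range(d)
        for j in range(i + 1, d)
        if abs(J[i, j]) > tol        # non-zero J_ij means X_i and X_j share an edge
    ]
    return J, h, edges

mu = np.array([1.0, -3.0, 4.0])
Sigma = np.array([[4.0, 2.0, -2.0],
                  [2.0, 5.0, -5.0],
                  [-2.0, -5.0, 8.0]])

J, h, edges = information_form(mu, Sigma)
print(np.round(J, 4))
print(np.round(h, 4))
print(edges)
```

For this example the only missing pair is $(1, 3)$, which matches the statement that $x_1$ and $x_3$ are independent given $x_2$.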

Gaussian Markov Random Fields

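- Follows directly from the information form

$$p(\mathbf{x}) \propto \exp\left[ -\frac{1}{2} \mathbf{x}^T J \mathbf{x} + (J\boldsymbol{\mu})^T \mathbf{x} \right]$$

- which is obtained from the covariance form with $J = \Sigma^{-1}$

$$p(\mathbf{x}) = \frac{1}{(2\pi)^{d/2} |\Sigma|^{1/2}} \exp\left[ -\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^T \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right]$$

- Break up the exponent into two types of terms

  - Using the potential vector $\mathbf{h} = J\boldsymbol{\mu}$

  - Terms involving a single variable $X_i$, called node potentials:

$$-\frac{1}{2} J_{i,i} x_i^2 + h_i x_i$$

  - Terms involving pairs of variables $X_i, X_j$, called edge potentials (when $J_{i,j} = 0$, there is no edge):

$$-\frac{1}{2} \left[ J_{i,j} x_i x_j + J_{j,i} x_j x_i \right] = -J_{i,j} x_i x_j$$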
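The decomposition into node and edge potentials can be verified by summing the individual terms and comparing with the full exponent. The sketch below assumes NumPy and a symmetric precision matrix; the function names and the test point `x` are hypothetical, and the example reuses the three-variable mean and covariance from the earlier slides as read here.

```python
import numpy as np

def node_potential(J, h, i, x):
    """Log node potential for X_i: -1/2 J_ii x_i^2 + h_i x_i."""
    return -0.5 * J[i, i] * x[i] ** 2 + h[i] * x[i]

def edge_potential(J, i, j, x):
    """Log edge potential for the pair (X_i, X_j): -J_ij x_i x_j (J symmetric)."""
    return -J[i, j] * x[i] * x[j]

def log_unnormalized(J, h, x):
    """Sum of all node and edge log potentials."""
    d = len(x)
    total = sum(node_potential(J, h, i, x) for i in range(d))
    total += sum(edge_potential(J, i, j, x) for i in range(d) for j in range(i + 1, d))
    return total

mu = np.array([1.0, -3.0, 4.0])
Sigma = np.array([[4.0, 2.0, -2.0],
                  [2.0, 5.0, -5.0],
                  [-2.0, -5.0, 8.0]])
J = np.linalg.inv(Sigma)
h = J @ mu

x = np.array([0.5, -1.0, 2.0])                 # arbitrary test point
from_potentials = log_unnormalized(J, h, x)
from_exponent = -0.5 * x @ J @ x + h @ x       # exponent of the information form
print(from_potentials, from_exponent)          # should agree up to rounding
```

Each pair is counted once in the edge sum because the two symmetric terms $J_{i,j} x_i x_j$ and $J_{j,i} x_j x_i$ have already been merged into a single $-J_{i,j} x_i x_j$.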
